Greetings from a world where…

my Hawkeyes no longer have a chance to sneak into the college football playoff

…As always, the searchable archive of all past issues is here. Please please subscribe here to support ChinAI under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay support access for all AND compensation for awesome ChinAI contributors).

Machine Failing: How Systems Acquisition and Software Development Flaws Contribute to Military Accidents

Last year around this time, when I was testifying before the U.S. Senate Intelligence Committee on the national security implications of AI, Senator Marco Rubio expressed worries (see the 1 hour, 2 min. mark) about whether authoritarian countries can put in place the appropriate guardrails for military AI systems to prevent accidental or unintended conflict escalation. A 2023 Foreign Affairs article echoes his concerns: “Due to Beijing’s lax approach toward technological hazards and its chronic mismanagement of crises, the danger of AI accidents is most severe in China.”

Just published in Texas National Security Review, my latest article — “Machine Failing: How Systems Acquisition and Software Development Flaws Contribute to Military Accidents” — intervenes in this debate from a different perspective. It simply asks: how does software contribute to military accidents?

Instead of isolating regime type as the key cause of military accidents, my argument highlights the software development lifecycle. When military acquisition follows a "waterfall" model that limits end-user feedback, it causes human-machine interaction issues (e.g. unwieldy interface designs), inhibits opportunities to discover unanticipated vulnerabilities, and limits capacity to address known safety risks revealed after testing and deployment.

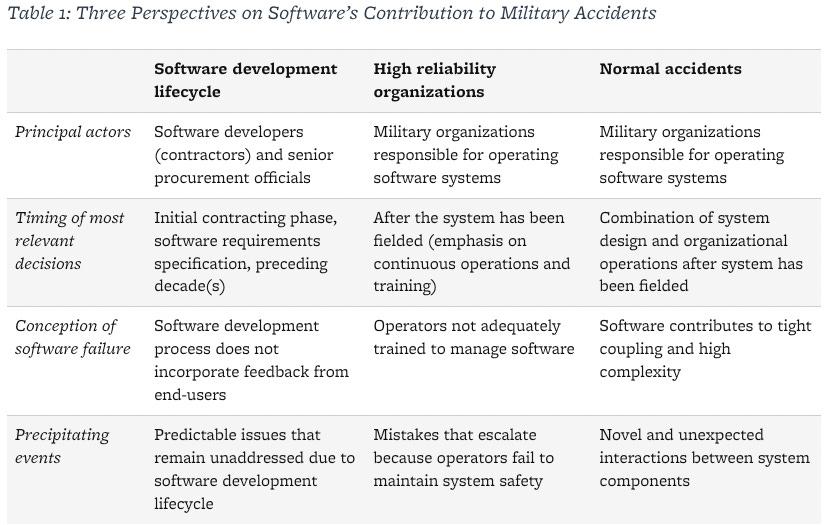

My theory builds on existing scholarship, especially Scott Sagan's seminal work The Limits of Safety, which tested normal accident theory (NAT) against high reliability organization (HRO) theory on the safety of U.S. nuclear weapons management. While this was a landmark contribution, debates between the NAT and HRO perspectives tend to concentrate on whether accidents are inevitable in complex technological systems, as opposed to how certain technologies produce accidents. By focusing on patterns of software development, I shed light on causal factors behind military accidents that NAT and HRO scholars often overlook (see Table 1 below for key differences between the three approaches).

Poring over post-accident reports that blamed crews and captains, one thing stood out: the organizations that built the software systems (big defense contractors) always escaped blame or even mention. “Machine Failing” argues that we should extend the causal timeline beyond decisions made on the battlefield to ones made decades earlier in software development.

Rather than seeing AI accidents as something that can only happen “over there” or in countries that lack the guardrails of the U.S. system, I test my argument on four cases that involved the U.S. military: the 1988 Vincennes shootdown, the 2003 Patriot fratricides, the 2017 USS McCain collision, and the 2021 Kabul airlift. Sources include recently declassified documents and interviews with contractors and military officials who developed and tested these systems. See the following screenshot for a taste of the empirical evidence presented throughout the cases.

Lastly, the conclusion draws out implications of my theory for military AI accidents. Here, we often gravitate toward the novel risks that AI poses. “Machine Failing” suggests that some of the gravest risks are ones we haven't fixed from past software-intensive systems.

FULL ARTICLE HERE: Machine Failing: How Systems Acquisition and Software Development Flaws Contribute to Military Accidents

ChinAI Links (Four to Forward)

Should-read: AI’s $600B Question

In Sequoia’s blog, David Cahn explores the gap between the revenue expectations implied by AI infrastructure spending and the actual revenue growth in the AI ecosystem. From the piece:

But we need to make sure not to believe in the delusion that has now spread from Silicon Valley to the rest of the country, and indeed the world. That delusion says that we’re all going to get rich quick, because AGI is coming tomorrow, and we all need to stockpile the only valuable resource, which is GPUs.

Should-read: The New Artificial Intelligentsia

Ruha Benjamin, Alexander Stewart 1886 Professor of African American Studies at Princeton University, criticizes how AI evangelists “wrap their self-interest in the cloak of humanistic concern.” It’s a thought-provoking critique of a lot of organizations and ideas (e.g., Future of Humanity Institute, effective altruism, longtermism) where I first encountered this AI governance field, though I think it paints people concerned about AI safety with too broad a brush. This is the fifth essay in the Los Angeles Review of Books Legacies of Eugenics series.

Should-read: On Hilke Schellmann’s “The Algorithm”

Benjamin’s piece prompted me to explore the Los Angeles Review of Books science and technology category, which had a lot of great stuff. This review of Schellmann’s book on how we are giving AI too much control in the hiring process was really fascinating.

Should-attend: UCLA Burkle Center webinar on my book

Next stop on the book tour: a virtual talk hosted by UCLA’s Burkle Center for International Relations — Wednesday, October 23, 2024 12:30 PM (Pacific Time). Thanks to everyone who is diffusing the book!

Thank you for reading and engaging.

These are Jeff Ding's (sometimes) weekly translations of Chinese-language musings on AI and related topics. Jeff is an Assistant Professor of Political Science at George Washington University.

Also! Listen to narrations of the ChinAI Newsletter in podcast format here.

Any suggestions or feedback? Let me know at chinainewsletter@gmail.com or on Twitter at @jjding99