ChinAI #291: Chinese open source models lead foreign ones, closing in on global first-tier closed source models

Greetings from a world where…

The Hunchback of Notre Dame is a severely underrated movie

…As always, the searchable archive of all past issues is here. Please please subscribe here to support ChinAI under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay support access for all AND compensation for awesome ChinAI contributors).

Feature Translation: Chinese Large Model Benchmark Evaluation October 2024 Report

Context: The clear-cut winner of last issue’s Around the Horn roundup was the SuperCLUE benchmark update. We’ve been regularly following this Chinese language understanding evaluation team since May 2023 (ChinAI #224). Notably, in their October 2024 report (link to original Chinese), SuperCLUE brands itself as an “independent, 3rd-party Artificial General Intelligence (AGI) evaluation organization” with the mission of “Accurately quantifying the progress of AGI, defining the roadmap for humanity's journey towards AGI.”

Key Takeaways: Over the past year and a half, the gap in general capabilities between the top Chinese and international models has continued to shrink, from 30.12% in May 2023 to 1.29% in August 2024. (Note: these results are on Chinese-language tasks.)

However, after the release of OpenAI’s o1, the gap widened again to 8%, as the final uptick in the image below shows.

A large gap remains on “Hard” tasks such as high-level reasoning and precise instruction following. On the SuperCLUE-Hard benchmark, o1-preview scored 64.89 points, 13.8 points ahead of the top Chinese model, GLM-4Plus (51.09).

Here’s an example of a difficult high-level reasoning task (slide 26 of the full report): A company plans to host a large conference and wants to maximize the number of attendees without exceeding a set budget. We know: the venue rental fee is r RMB per square meter; the venue area is A square meters; the food and drink cost per attendee is c RMB; the combined venue rental and food/drink cost must not exceed 1,000,000 RMB; the venue can hold at most P people; and the rental fee depends on the number of attendees p through the relationship $r = k \cdot p$, where k is a constant. How should p be set to maximize the number of attendees? Please use the Lagrange multiplier method to find the solution.
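For readers who want to check their own answer, one way the derivation could go is sketched below. It is an illustrative worked answer, not a reference solution from the report, and it assumes k, A, and c are positive and that p must be a whole number no larger than P. Because total cost (kA + c)p grows linearly in p, the budget constraint binds at the optimum:

```latex
\begin{aligned}
&\text{Total cost: } rA + cp = (kA + c)\,p \le 1{,}000{,}000, \qquad 0 \le p \le P \\
&\mathcal{L}(p, \lambda) = p - \lambda\left[(kA + c)\,p - 1{,}000{,}000\right] \\
&\frac{\partial \mathcal{L}}{\partial p} = 1 - \lambda\,(kA + c) = 0
  \;\Rightarrow\; \lambda^{*} = \frac{1}{kA + c} \\
&\frac{\partial \mathcal{L}}{\partial \lambda} = 0
  \;\Rightarrow\; p^{*} = \frac{1{,}000{,}000}{kA + c} \\
&p_{\text{opt}} = \min\!\left(P,\; \left\lfloor \frac{1{,}000{,}000}{kA + c} \right\rfloor\right)
\end{aligned}
```

The multiplier λ* = 1/(kA + c) acts as the shadow price of the budget: each additional RMB would buy roughly 1/(kA + c) more attendees. The capacity cap P and the rounding down to a whole number are extra steps that the Lagrange condition alone does not supply.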

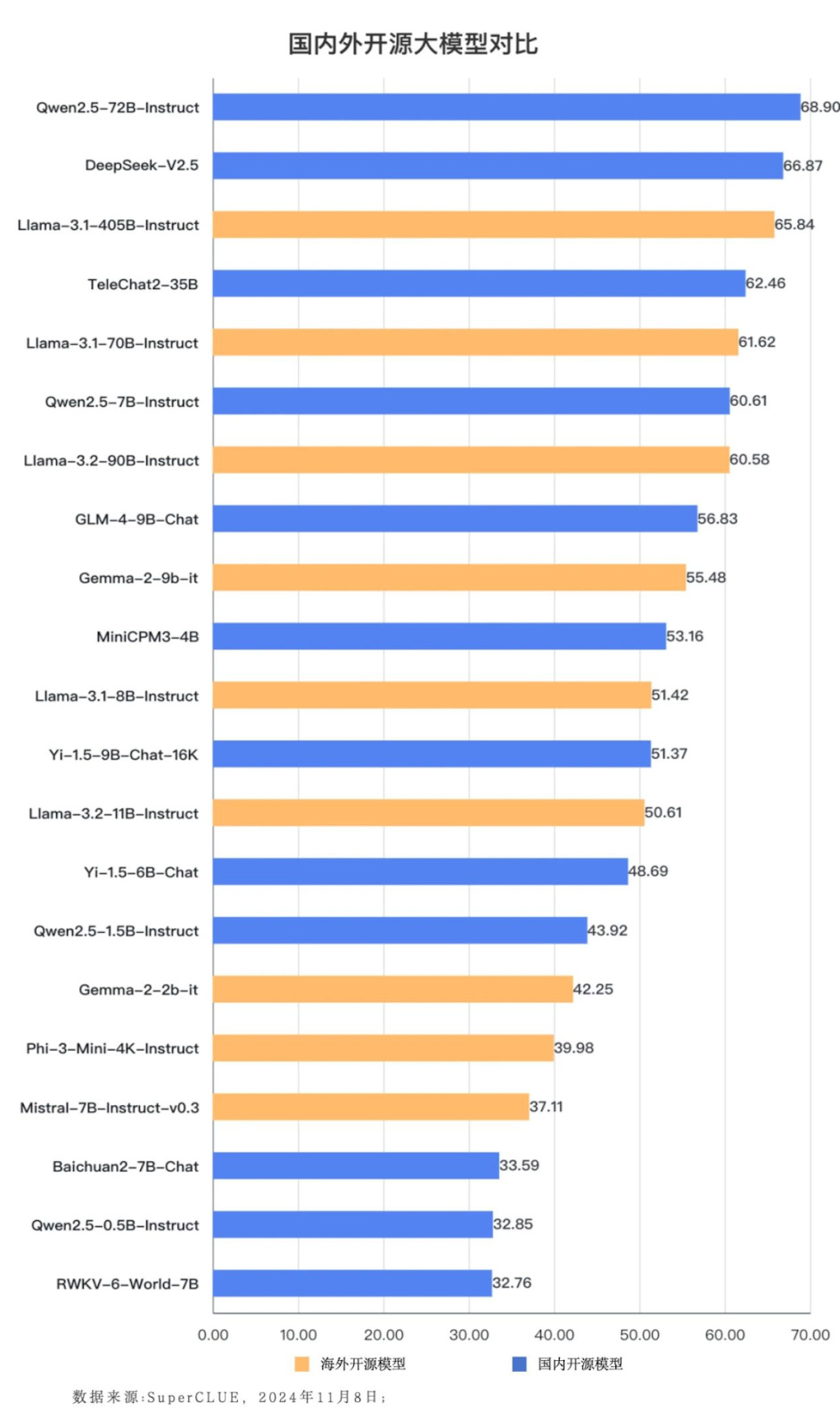

On Chinese-language prompts, Chinese open source models like Qwen2.5 (Alibaba’s model) and DeepSeek-V2.5 outperform their international competitors, including Meta’s best Llama models.

The screenshot above compares Chinese open source LLMs (in blue) with international open source models (in yellow). In fact, Chinese open source models are nearing the performance of the world’s top closed-source models on SuperCLUE: “Qwen2.5-72B-Instruct scored 68.90 points, which is 2.34 points lower than the average of the top 5 closed source models in the world.”

This aligns with a broader trend of strong progress among small models, which may offer developers the best “bang for the buck,” that is, the best balance between performance and power consumption. In SuperCLUE’s ranking of models under 10B parameters (slide 38 of the full report), for instance, both Qwen2.5-7B-Instruct and GLM-4-9B-chat ranked higher than Google’s Gemma-2-9b-it model.

FULL-ish Translation including some other notes I took on interesting slides from: Chinese Large Model Benchmark Evaluation October 2024 Report

ChinAI Links (Four to Forward)

Must-use: CSET-ETO Chinese Technical Glossary

So cool to see this glossary of 15,000+ terms (yes, at first, I thought 1,500 was impressive enough, but that extra zero is real!) from Chinese primary sources on technology and security, with expert translations and annotations by CSET's translation team. It’s created and maintained by Ben Murphy with support from the Emerging Technology Observatory.

Should-read: The US Can Win Without Compromising AI Safety

Claudia Wilson, Senior Policy Analyst at the Center for AI Policy, makes a powerful argument that there is no tradeoff between AI safety and U.S. primacy. This was a cool statistic from the piece:

Safety testing is highly affordable relative to training costs and thus unlikely to slow innovation. Google’s Gemini Model was estimated to have cost $191 million in training costs, ignoring other significant costs like ongoing inference and well-compensated staff. In contrast, a high-quality set of pre-deployment evaluations could be conducted for around $235,000, which would be 0.12% of Gemini’s training costs.
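The 0.12% figure follows directly from the two cost estimates quoted above. A quick check, using only those quoted numbers, confirms the rounding:

```python
# Cost estimates as quoted in the Center for AI Policy piece (USD).
GEMINI_TRAINING_COST = 191_000_000
PRE_DEPLOYMENT_EVALS_COST = 235_000

# Evaluation cost as a percentage of the estimated training cost.
share_pct = PRE_DEPLOYMENT_EVALS_COST / GEMINI_TRAINING_COST * 100

print(f"{share_pct:.2f}% of training costs")  # prints "0.12% of training costs"
```

Put the other way around, the estimated training bill is roughly 800 times the cost of the evaluation suite.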

Tech Policy Press is quickly becoming a publication that I browse multiple times a week.

Should-read: Long interview with DeepSeek founder Liang Wenfeng

Zihan Wang, first-year CS PhD student at Northwestern, translated a Chinese-language profile of DeepSeek, one of the Chinese AI companies that has really impressed lately (including on the October SuperCLUE benchmark above). H/t to Jack Clark’s ImportAI newsletter for sharing.

Should-attend: Beijing book talk events

The most rewarding, and toughest, semester of my college experience was the one I spent directly enrolled in classes at Beida (Peking University) through the CIEE advanced Chinese studies program. This week, I’ll be back in Beijing to give talks at Beida and a few other places (including a Tsinghua CISS event), organized by Princeton University Press’s China office.

Thank you for reading and engaging.

These are Jeff Ding's (sometimes) weekly translations of Chinese-language musings on AI and related topics. Jeff is an Assistant Professor of Political Science at George Washington University.

Also! Listen to narrations of the ChinAI Newsletter in podcast format here.

Any suggestions or feedback? Let me know at chinainewsletter@gmail.com or on Twitter at @jjding99