ChinAI #317: Chinese AI models disable answers to Gaokao questions

Greetings from a world where…

DC summer is making itself known to my armpits

…As always, the searchable archive of all past issues is here. Please please subscribe here to support ChinAI under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay support access for all AND compensation for awesome ChinAI contributors).

Feature Translation: AI large models can’t solve Gaokao questions, nor do they dare to solve them

Context: In tabulating the votes for Around the Horn, it was a close call, but I ended up choosing the TMTPost article (link to original Chinese) on the restrictive measures taken by Chinese generative AI platforms during the Gaokao (national college entrance exam) period. I appreciate that folks were interested in the essay on historical patterns in the rise and fall of great powers, but there wasn’t much of a clear AI connection. I think the Gaokao restrictions are a very interesting case that illuminates how Chinese platforms approach technical governance and content security. As for its significance, the article notes, “Mainstream Chinese large model developers have actively or passively taken restrictive measures on Gaokao-related prompts, marking a first in the development process of Chinese large models.”

Key Takeaways: What was the scope of these restrictions? TMT Post prompted some of the most popular Chinese models to answer math questions from this year’s Gaokao.

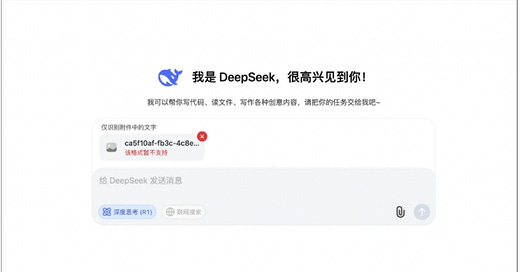

As the screenshots below of DeepSeek and ByteDance’s Doubao show, some large models disabled the function to upload test questions and respond to the uploaded image.

Alibaba’s Qwen 3 gave a specific time range for when this function was disabled: 8:00-11:30 AM and 1:00-6:30 PM from June 7 to June 10, corresponding to the Gaokao exam period.
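To make the time-window rule concrete, here is a minimal sketch of how a check like this could look. It is purely illustrative: nothing in the article describes how Qwen actually implements the restriction, and the function name, the constants, the year (2025), and the use of Beijing time are all my assumptions.

```python
from datetime import date, datetime, time, timedelta, timezone

# Assumptions (not from the article): Beijing time (UTC+8, no daylight saving),
# the year 2025, inclusive window boundaries, and every name defined here.
BEIJING = timezone(timedelta(hours=8))
EXAM_FIRST_DAY = date(2025, 6, 7)
EXAM_LAST_DAY = date(2025, 6, 10)
BLOCKED_WINDOWS = [
    (time(8, 0), time(11, 30)),   # morning session: 8:00-11:30 AM
    (time(13, 0), time(18, 30)),  # afternoon session: 1:00-6:30 PM
]


def image_qa_disabled(now: datetime) -> bool:
    """Return True if a timezone-aware timestamp falls inside a blocked window."""
    local = now.astimezone(BEIJING)
    if not (EXAM_FIRST_DAY <= local.date() <= EXAM_LAST_DAY):
        return False
    return any(start <= local.time() <= end for start, end in BLOCKED_WINDOWS)
```

Under these assumptions, a request at 9:00 AM Beijing time on June 7 would be refused, while one during the midday break or after June 10 would go through, which matches the article's observation that the function came back once the exam period ended.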

According to TMT Post, DeepSeek implemented stricter restrictions (screenshot below), declining even to answer questions about the general characteristics of this year’s Gaokao exam. However, it did give a full response when the user specified that they were already employed: “I’m already working, I want to understand trends of this year’s Gaokao.”

Why did DeepSeek, Alibaba, ByteDance, Moonshot AI, and others all disable this functionality?

The simple, though unverified, answer is that they received guidance from the government to prevent cheating during the Gaokao, which is “an institutional pillar of education equity and societal stability.” Relatedly, this April, CCTV News, China’s news channel with the largest reach, broadcast a segment titled, “How to prevent AI glasses from becoming a 'magic weapon' for cheating in exams?”

The second, related, answer is that regulatory authorities have been scrutinizing companies that traffic in misinformation related to AI and the Gaokao. The Ministry of Education, the Cyberspace Administration of China, and the Ministry of Public Security investigated businesses that sold “Gaokao predicted questions banks” that used AI to guess what would appear on the exam, capitalizing on the stress and worries of students and parents.

The third, possibly more interesting, answer is that these restrictions “may not be completely ‘forced’ behavior”. Since large models still struggle to generate consistent answers to standardized test questions on math and physics, these prompts may expose the model’s weaknesses to a broader public. I’m not too convinced by this answer, as these functionalities were restored after the Gaokao period.

FULL TRANSLATION, which also includes some translations of selected WeChat comments: AI large models can’t solve Gaokao questions, nor do they dare to solve them

ChinAI Links (Four to Forward)

Should-read: ImportAI on China’s heterogeneous compute cluster

A recent issue of Jack Clark’s great newsletter included this nugget: “Chinese researchers stitch a data center together out of four different undisclosed chips.” Very relevant to our recent series on new-style AI infrastructure.

Should-attend: Can Multinationals Win in China? Lessons from Apple’s Experience

On July 2, 9AM-10AM, CSIS is hosting a webinar on Patrick McGee’s recent book Apple in China: The Capture of the World’s Greatest Company. I’ll be providing feedback alongside Meg Rithmire (James E. Robison Professor, Harvard Business School) and James McGregor (Chairman, Greater China, APCO).

Should-read: Guidelines for the Use of Generative AI in Primary and Secondary Schools (in Chinese)

Very relevant to this week’s issue: last month, China’s Ministry of Education published these guidelines for the use of generative AI. From my quick scan, one thing that stood out was the focus on educational equity. H/t to JC for sharing.

Should-read: Artificial Eyes - Generative AI in China’s Military Intelligence

For Recorded Future, senior threat intelligence analyst Zoe Haver’s report assesses the PLA's interest in using generative AI for intelligence purposes, analyzes efforts by the PLA and China's defense industry to develop and apply generative AI-based intelligence tools, and profiles relevant defense industry entities. The section on “Generative AI military intelligence providers” was especially useful.

Thank you for reading and engaging.

These are Jeff Ding's (sometimes) weekly translations of Chinese-language musings on AI and related topics. Jeff is an Assistant Professor of Political Science at George Washington University.

Check out the archive of all past issues here & please subscribe here to support ChinAI under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay for a subscription will support access for all).

Also! Listen to narrations of the ChinAI Newsletter in podcast format here.