ChinAI Newsletter #2: The Language Asymmetry in AI Policy

Welcome to the ChinAI Newsletter!

These are Jeff Ding's weekly translations of writings on AI policy and strategy from Chinese thinkers. I'll also include general links to all things at the intersection of China and AI. Please share the subscription link if you think this stuff is cool.

I'm a grad student at the University of Oxford, where I lead the research on China AI happenings for the Future of Humanity Institute's Governance of AI Program.

The language asymmetry in AI policy

This week’s edition will focus on what I call the language asymmetry in AI policy. Andrew Ng referred to the language asymmetry with regard to AI technical research in an article in The Atlantic: “The language issue creates a kind of asymmetry: Chinese researchers usually speak English so they have the benefit of access to all the work disseminated in English. The English-speaking community, on the other hand, is much less likely to have access to work within the Chinese AI community. ‘China has a fairly deep awareness of what’s happening in the English-speaking world, but the opposite is not true,’ says Ng.”

Let’s take the “Malicious Use of Artificial Intelligence” report, co-authored by some of my colleagues at FHI, as a running example. If you haven’t heard of it, you should definitely read it; I think it’s a critical contribution to the field of AI governance.

Running Example: Chinese Coverage of Malicious Use Report

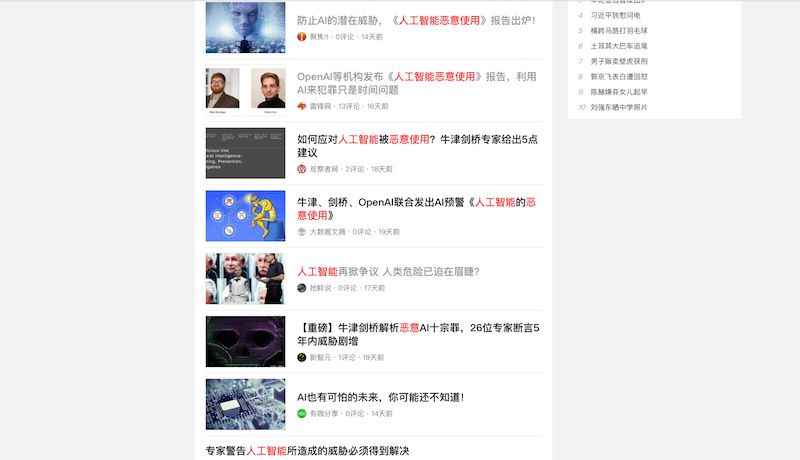

Above is a screenshot of what came up when I searched “人工智能恶意使用” [translates to: Malicious Use of Artificial Intelligence] in Jinri Toutiao, China’s most popular news aggregator (120 million daily active users, who spend an average of 74 minutes a day on the app). Every piece in this screenshot is an article about the report, and there are many more below where the screenshot cuts off. Two of these pieces were published on February 22, just one day after the Malicious Use report was published on FHI’s site. One day. That’s the turnaround time.

The article that gave the most comprehensive treatment of the report was published two days after the report’s release. It comes from leiphone, a media platform founded in 2011 that focuses on analyzing future trends in Chinese innovation. Its writers had read Miles and Shahar’s opinion article in Wired before writing this piece, and they provide a good summary of the 101-page report. If you scroll down to the end of the article, there’s even a section with screenshots of Miles talking to people on Twitter about hidden Easter eggs in the report (anagrams of the two lead co-authors’ names).

Sure, it makes sense that a well-financed new media platform with hundreds of thousands of followers would cover this report, but what I want to emphasize is that media outlets are not the only ones involved in these discussions. Ordinary individuals are interested in these issues as well. Let’s take a look at this article on the Malicious Use report. You can check out my translation of this person’s post here. The author of this article goes by the handle qiangxianshuo [“fighting for fresh talk”]; user profile here. Most of this author’s other posts are about photography: according to their bio, they are big fans of Wim Wenders’ Polaroids. But this is the point. New media companies, old media companies, big tech companies with their own media divisions, small tech companies with their own media divisions, and photographers who take an interest in AI are all contributing to a community that vigorously discusses topics like the Malicious Use report.

Here’s one last example, by way of newsletter subscriber Danit Gal. On March 7, Fei-Fei Li published an op-ed in the New York Times titled “How to Make A.I. That’s Good for People.” On March 9, a translation of the op-ed was posted on a WeChat digest. Again, a two-day turnaround. This time, the author of the post is a stay-at-home mom who did her master’s in genetics.

Jeff's Translation of Random Toutiao Post on Malicious Use of AI Report

This Week's ChinAI Links

Exciting stuff happening at CNAS with the launch of their AI initiative: https://www.cnas.org/research/technology-and-national-security/artificial-intelligence

CSIS is also doing some great work. Samm Sacks's article on the emerging debate over data privacy in China is essential reading; it draws on an exchange with the lead drafter of the new privacy standard: https://www.csis.org/analysis/chinas-emerging-data-privacy-system-and-gdpr

Some follow-up to my Deciphering China's AI Dream report, which was published last week:

Hacker News had a good discussion thread: https://news.ycombinator.com/item?id=16611931

Will Knight of MIT Technology Review wrote a great piece on China's aim to take the lead in shaping AI-related technical standards:

https://www.technologyreview.com/s/610546/china-wants-to-shape-the-global-future-of-artificial-intelligence/

Link to Jeff's Report: Deciphering China's AI Dream

Thank you for reading and engaging.

Please feel free to comment on any of my translations in the Google docs, and you can contact me at jeffrey.ding@magd.ox.ac.uk or on Twitter at @jjding99