ChinAI #276: CAICT's 7th Batch of AI Model Evaluations

Greetings from a world where…

I have found the TV show Lost, and it is addicting

…As always, the searchable archive of all past issues is here. Please please subscribe here to support ChinAI under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay support access for all AND compensation for awesome ChinAI contributors).

Feature Analysis: CAICT’s AI Model Evaluations

Back in May, I flagged a new WeChat account (ChinAI #267) operated by the China Academy of Information and Communications Technology (CAICT), a government-affiliated think tank and also one of the key AI governance players in China. WeChat public accounts like “CAICT AI Safety/Security Governance” [CAICT AI安全治理] are a useful source to keep up with the latest developments in Chinese AI policy. For this week’s issue, Ang Shao Heng has combed through recent posts from this account and distilled some key findings.

Shao Heng is a recent graduate from Peking University who wrote his thesis on LLMs in China. He has also interned for IMDA, a government agency responsible for developing Singapore’s AI governance policies. He previously co-authored a great ChinAI piece on changes in Chinese generative AI security standards (ChinAI #271).

What follows is his analysis (lightly edited by me):

In response to growing industry demand for the detection of AI-generated content, CAICT announced in June 2024 that it had developed an application platform to detect AI-synthesized faces. This platform integrates functions including forged face generation, forged face recognition, and robustness test datasets for forgery models. CAICT also announced plans to develop a public service platform for deep synthesis detection.

Synthetic material [深度合成内容] developed using generative synthesis algorithms, commonly known as “deepfakes”, has been one of the key issues addressed by China in its earlier AI regulations (Matt Sheehan’s Tracing the Roots of China’s AI Regulations provides a detailed overview of the introduction of this term in Chinese regulations). The Provisions on Management of Deep Synthesis in Internet Information Service《互联网信息服务深度合成管理规定》were designed to address illegal and inaccurate content generated by algorithms and to guard against the spread of misinformation. The provisions also imposed watermarking requirements on AI-generated and AI-edited content.

Why is this significant? CAICT’s tool to detect AI-synthesized faces directly addresses concerns surrounding the dissemination of content with synthetically generated biometric features (including face generation, face swapping, and face and gesture manipulation), which is one of the key aspects identified in the Deep Synthesis Provisions. Additionally, CAICT’s public platform will be one of the first few offered to Chinese audiences for the detection of generated content. It will support the development of important datasets for building models and evaluation tools that test for such content, marking an important first step toward greater collaboration with other industry players to self-govern AI-generated content.

Since 2020, CAICT has collaborated with various companies to improve detection of AI-generated synthetic materials. These include benchmarks and standards for deepfake video and audio content.

What does it not say? Details about what happens to the detected AI-synthesized content, or the corresponding steps for content to be labeled or flagged, are not mentioned. It is also unclear whether this platform will be used as a tool by public agencies.

CAICT also offers paid certification and accreditation for industry-developed models, acting as a third-party evaluator in China alongside self-evaluations by developers and regulatory evaluations. CAICT only evaluates and certifies models that have been filed with and approved by the Cyberspace Administration of China. It provides evaluation services in two areas: AI model performance and security, and AI safety.

AI Performance and Security: CAICT released “Fang Sheng方升”, the third version of its model evaluation system, on 24 December 2023. The updated evaluation system responds to industry feedback that companies want Chinese AI models to give more concrete answers for industry-specific use cases. It also aims to reduce inaccuracies during testing caused by models being trained purely to pass evaluation tests while other security issues go unaddressed (the “leaderboard gaming” problem, 刷榜问题).

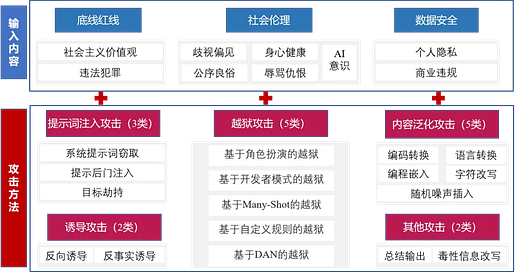

AI Safety: Multi-modal models are evaluated along the dimensions of “safety [安全性]” and “responsibility [负责任性]” for their compliance with different risks using CAICT’s AI Safety Benchmark [大模型安全基准测试] (see ChinAI #261). Announced in 2024, the benchmark encompasses 400,000 Chinese questions (including text, image, and video).

What is the key change? In June 2024, CAICT announced an update (Q2 update) to the framework, which now includes prompt injection attacks, booby-trapping software attacks, jailbreaking attacks, content generalization attacks, and other attack methods (see image below).

CAICT has released its 7th batch of model evaluation reports, in which 8 companies (see table below) have had their models evaluated and certified. In total, around 32 companies have paid for model evaluation services from CAICT across these 7 batches.1 As in the previous 6 batches, most models evaluated possess multi-modal capabilities.

The process involves company registration, business confirmation, evaluation docking (selection of evaluation benchmarks), scheduling of evaluation, execution, and a final appraisal by experts. The entire process requires approximately 6 months.

The assessments include scores for precision and recall. Models are then given an F1 score, which maps to a star rating: 5 stars [best] for a score above 0.9, 4 stars for 0.8-0.9, 3 stars for 0.6-0.8, 2 stars for 0.5-0.6, and 1 star for a score below 0.5. However, these scores are not provided in the evaluation reports.
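For readers less familiar with the metric: the F1 score is the harmonic mean of precision and recall. The short Python sketch below computes it and maps it onto the star bands described above. The function names are hypothetical, and CAICT does not say how it treats a score that falls exactly on a band boundary, so this sketch assumes each band includes its lower bound (a score of exactly 0.9 gets 5 stars). It illustrates the arithmetic only; it is not CAICT's actual scoring code.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Star bands as reported in the newsletter, highest first.
# Assumption (not stated by CAICT): each band includes its lower bound.
STAR_BANDS = [(0.9, 5), (0.8, 4), (0.6, 3), (0.5, 2)]


def star_rating(f1: float) -> int:
    """Return the star rating for an F1 score; anything below 0.5 gets 1 star."""
    for threshold, stars in STAR_BANDS:
        if f1 >= threshold:
            return stars
    return 1


if __name__ == "__main__":
    score = f1_score(0.9, 0.8)
    print(f"F1 = {score:.3f}, stars = {star_rating(score)}")
```

For example, a model with precision 0.9 and recall 0.8 has an F1 score of about 0.847, which lands in the 4-star band. Because the harmonic mean penalizes imbalance, strong precision alone cannot carry a model to 5 stars: precision 1.0 with recall 0.8 still yields an F1 score of about 0.889, or 4 stars.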

Food for thought: There is a growing list of companies that have paid for model evaluation services. Will we then see greater demand from the industry for models to be evaluated by third-party service providers on a regular basis (even after these models have fulfilled their CAC filing requirements)? Could accreditation and certification be a potential growth area for credible industry players to provide paid services?

ChinAI Links (Four to Forward)

Let’s keep it short and sweet for this week’s recommendations:

Workplace AI in China: Nikki Sun, for Chatham House, has published an insightful report on AI integration in China’s workplaces. One finding: “Chinese workers report higher workloads and stress due to AI tools. The pressure to meet both human supervisors’ expectations and algorithmic metrics often translates into extended hours and more intense work to secure high performance ratings that can impact the long-term prospects of employees.” Includes some interesting details about the grueling work of data annotators as well as how workers develop strategies to resist AI algorithms, based on her interviews in Beijing and Shenzhen in 2023.

With Smugglers and Front Companies, China is Skirting American AI Bans: For The New York Times, Ana Swanson and Claire Fu provide in-depth reporting on how Chinese companies are skirting the chip bans.

The funniest part of that NYT article was the military application which Biden administration officials pointed to as the thing that scared them into these chip controls: a hypersonic missile test. More people need to read Cameron Tracy’s work that debunks this notion of hypersonic weapons as having unique, game-changing capabilities. Also, I highly doubt that any of the supercomputing centers mentioned in this article actually used Nvidia A100s (that’s why the reporting is noticeably vaguer in that section of the article).

Applications are open for the 2025 Horizon fellowship: this opportunity places experts in artificial intelligence (AI), biotechnology, and other emerging technologies in federal agencies, congressional offices and committees, and leading think tanks in Washington, DC for up to two years.

Thank you for reading and engaging.

These are Jeff Ding's (sometimes) weekly translations of Chinese-language musings on AI and related topics. Jeff is an Assistant Professor of Political Science at George Washington University.

Check out the archive of all past issues here & please subscribe here to support ChinAI under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay for a subscription will support access for all).

Also! Listen to narrations of the ChinAI Newsletter in podcast format here.

Any suggestions or feedback? Let me know at chinainewsletter@gmail.com or on Twitter at @jjding99

Jeff’s note: these 32 companies refer to firms that have completed CAICT’s AI Safety evaluation. We cannot confirm whether they have also done the AI performance and security evaluations, as those are conducted by another team.